As AI business tools become increasingly common, a new question arises: Is a standard server sufficient, or do you need a dedicated AI server?

Whether you are developing applications, performing data analytics, or exploring AI capabilities for your business, this guide explains what an AI server is, why it matters, and how to decide whether it is a worthwhile investment.

Introduction

Businesses are adopting artificial intelligence for chatbots, automation, forecasting, image recognition, and data-stream analysis. However, these workloads often outgrow the basic shared servers used for web hosting or simple applications.

In simple terms, an AI server is a high-performance, dedicated computer system built for resource-intensive AI tasks such as training models and running them (inference). These tasks demand large amounts of fast memory along with specialized hardware.

AI tools have leveled the playing field in many industries. Whether you are a large corporation, small business owner, IT professional, freelancer, or creative agency, using AI to enhance productivity and automate tasks has become standard practice.

Let’s dive in and explain what an AI server is, how it differs from an ordinary server, and where it is typically deployed.

Key Takeaways

- AI servers are designed for demanding training and inference tasks that standard servers struggle to handle efficiently.

- They use accelerators like GPUs and TPUs paired with high-bandwidth memory and fast NVMe storage for superior performance.

- Businesses that run real-time AI, custom model training, or privacy-sensitive workloads gain major speed and control advantages from dedicated AI infrastructure.

- Choosing between cloud, hybrid, or on-prem setups depends on cost, latency needs, and how often you run heavy workloads.

- UltaHost helps teams adopt AI more affordably by offering fast NVMe hosting, GPU-ready platforms, and a low-complexity way to start without buying expensive hardware.

What is an AI Server?

An AI server is a computer purpose-built to run artificial intelligence workloads rather than everyday tasks like website hosting or general application processing. It handles model training, real-time inference, and massive data flows that require far more power and parallel processing than a typical server provides.

Unlike traditional servers, which rely mostly on CPUs, AI servers commonly feature specialized accelerators, such as GPUs, TPUs, or FPGAs, to process vast datasets in parallel.

They also use high-bandwidth memory, faster storage, and upgraded networking to keep data flowing smoothly to modern AI models.

This design makes AI servers suitable for situations like:

- Training large machine-learning models.

- Powering real-time chatbots and conversational AI.

- Processing images or videos in bulk.

- Running AI at the edge for low-latency responses.

In short, an AI server is not merely a faster computer; it is a computing environment built and tuned for the requirements of modern AI.

AI Server vs Standard Server

| Feature | Standard Server | AI Server |
|---|---|---|
| Primary Use | Websites, apps | AI training, inference, data-heavy processing |
| Core Compute | Primarily CPUs | GPUs/TPUs/FPGAs + CPUs |
| Memory | Moderate | Very high |
| Storage | Standard SSD | High-speed NVMe sized for large datasets |
| Networking | Regular bandwidth | High-speed, low-latency |
| Workloads | Hosting, business apps | Chatbots, generative AI, vision, model training |

Common Use-Cases for AI Servers

AI servers are no longer just for big tech companies. As AI is built into more everyday products and services, a growing number of enterprises, agencies, and freelancers find that running AI workloads locally or on dedicated infrastructure can be faster, cheaper, and more flexible than relying solely on cloud APIs.

These are the situations where AI servers make a real difference.

Real-Time Inference

If you run chatbots, recommendation engines, automated support tools, or computer-vision systems, speed matters.

An AI server can return responses and predictions to your users almost instantly, because requests are not delayed by shared cloud resources or extra network hops.

For example, a web agency providing a chat or search tool powered by AI can keep latency low and costs predictable by running inference on its own AI server.
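To check whether latency is really a problem, measure tail latency rather than the average, since slow outliers are what users notice. The sketch below is a minimal example under stated assumptions: `fake_predict` is a hypothetical stand-in that sleeps about 5 ms, so replace it with a call to your own model or API. It reports the median (p50) and 95th-percentile (p95) latency in milliseconds using only Python's standard library.

```python
import statistics
import time


def fake_predict(prompt: str) -> str:
    """Hypothetical stand-in for a model call; sleeps ~5 ms to simulate inference."""
    time.sleep(0.005)
    return prompt.upper()


def measure_latency_ms(predict, inputs, runs_per_input=5):
    """Time repeated calls to `predict` and return (p50, p95) latency in milliseconds."""
    samples = []
    for text in inputs:
        for _ in range(runs_per_input):
            start = time.perf_counter()
            predict(text)
            samples.append((time.perf_counter() - start) * 1000)
    # n=20 yields 19 cut points; index 18 is the 95th percentile.
    # The "inclusive" method keeps the estimate within the observed range.
    cuts = statistics.quantiles(samples, n=20, method="inclusive")
    return statistics.median(samples), cuts[18]


if __name__ == "__main__":
    p50, p95 = measure_latency_ms(fake_predict, ["hello", "where is my order?"])
    print(f"p50={p50:.1f} ms, p95={p95:.1f} ms")
```

Run the same measurement against a cloud endpoint and against a dedicated server; if the p95 gap matters to your users, that is a concrete argument for local inference.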

AI Model Training

Many businesses no longer rely only on third-party AI APIs. Instead, they fine-tune their own models to improve accuracy.

To do this, you need an AI server with accelerated compute, large memory pools, and fast I/O.

For example, a product team adapting an open-source LLM into an internal assistant benefits from direct, dedicated compute for repeated tuning cycles.
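A quick way to size accelerator memory is to multiply a model's parameter count by the bytes each parameter needs. The sketch below uses common rules of thumb rather than vendor figures: 2 bytes per parameter for FP16/BF16 weights at inference, 1 byte for 8-bit quantized weights, and roughly 16 bytes per parameter for full fine-tuning with mixed precision and the Adam optimizer. Activations, KV caches, and framework overhead come on top, so treat the results as lower bounds. The 7-billion-parameter model and the 80 GB GPU are hypothetical examples.

```python
import math

# Rule-of-thumb bytes per parameter (assumptions, not vendor figures)
BYTES_PER_PARAM = {
    "inference_fp16": 2,        # FP16/BF16 weights only
    "inference_int8": 1,        # 8-bit quantized weights
    "finetune_adam_mixed": 16,  # FP16 weights + grads, FP32 master copy, two FP32 Adam moments
}


def estimate_memory_gb(num_params: float, mode: str) -> float:
    """Lower-bound memory in GB (1 GB = 1e9 bytes); ignores activations, KV cache, overhead."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


def gpus_needed(num_params: float, mode: str, gpu_memory_gb: float) -> int:
    """Minimum accelerator count, assuming memory can be split evenly across devices."""
    return math.ceil(estimate_memory_gb(num_params, mode) / gpu_memory_gb)


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter open-source LLM
    for mode in BYTES_PER_PARAM:
        gb = estimate_memory_gb(params, mode)
        print(f"{mode}: ~{gb:.0f} GB, at least {gpus_needed(params, mode, 80)} x 80 GB GPU(s)")
```

For the hypothetical 7B model, this gives about 14 GB for FP16 inference but about 112 GB for full fine-tuning, which is why training typically calls for multiple accelerators or memory-saving techniques.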

On-Site AI Deployments

Certain workloads cannot tolerate cloud latency or risk exposing sensitive data over the internet. Edge AI servers are useful when you need:

- Instant decisions (e.g., robotics, industrial automation).

- Strict data-privacy controls.

- On-site processing for IoT or video analytics.

For example, a manufacturing firm that runs vision systems for defect detection might deploy an AI server on-site.

Market Growth

With the rise of generative AI, global demand is accelerating. According to Grand View Research, the AI server market was worth approximately USD 124.81 billion in 2024 and is projected to reach about USD 854 billion by 2030, a compound annual growth rate (CAGR) of about 38.7%.
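The quoted growth rate can be sanity-checked with the standard CAGR formula, (end / start) ** (1 / years) - 1. Applied to the two market figures above over the six years from 2024 to 2030, it gives roughly 37.8%, close to the reported 38.7%. The small gap probably reflects the report measuring growth over a different base period; that is an assumption, since the report's exact forecast window is not stated here.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    # USD billions, using the market figures quoted above
    implied = cagr(124.81, 854.0, 2030 - 2024)
    print(f"Implied 2024-2030 CAGR: {implied:.1%}")
    print(f"Check: {project(124.81, implied, 6):.1f}")
```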

If you’re building AI features, managing large amounts of data, or offering AI-based services, understanding AI servers will help your organisation stay competitive.

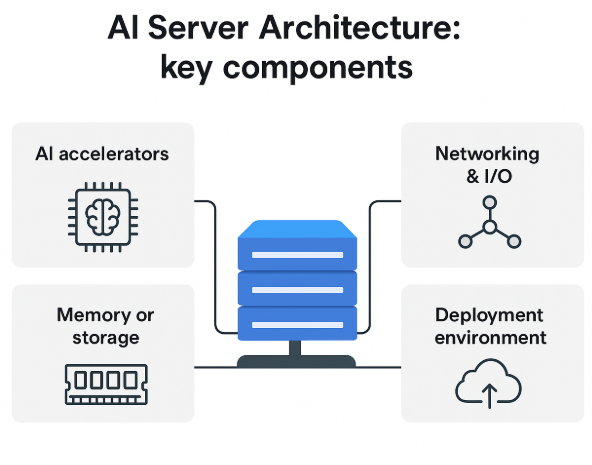

Core Architecture & Components

AI servers may look like normal servers from the outside, but they are designed quite differently inside. Their architecture enables fast movement of large data volumes, efficient parallel execution, and the heavy lifting required for training and inference.

Here is a general explanation of how an AI server works, without delving too deeply into the engineering details.

Hardware Components

AI workloads depend on several specialized hardware components. When selecting or evaluating an AI server, focus on these key components.

CPUs + AI Accelerators

The CPU sits at the heart of an AI server, but it does not carry the full load. AI tasks rely heavily on accelerators such as:

- GPUs, which excel at parallel processing and neural networks.

- TPUs, tailored for deep-learning workloads.

- FPGAs, which can be configured to run custom AI routines.

The accelerators handle training and inference, while the CPU manages task coordination and system operations.

Memory/Storage

AI systems need rapid access to vast amounts of data. That’s why AI servers use:

- High-bandwidth memory (HBM) to ease memory bottlenecks during training.

- Fast NVMe SSD storage for rapid read/write cycles.

These elements facilitate smooth data transfer, especially when running larger models.
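Whether storage can keep the accelerators busy is simple arithmetic: the required read throughput equals samples processed per second multiplied by the average sample size. The sketch compares that requirement with a drive's rated sequential read speed. The workload (4,000 images per second at 150 KB each), the drive speeds, and the 70% headroom factor are illustrative assumptions, not benchmarks.

```python
def required_read_gbps(samples_per_second: float, avg_sample_bytes: float) -> float:
    """Read throughput in GB/s (1 GB = 1e9 bytes) needed to keep accelerators fed."""
    return samples_per_second * avg_sample_bytes / 1e9


def storage_keeps_up(samples_per_second: float, avg_sample_bytes: float,
                     drive_read_gbps: float, headroom: float = 0.7) -> bool:
    """True if the requirement fits within `headroom` (assumed 70%) of the drive's rated speed."""
    need = required_read_gbps(samples_per_second, avg_sample_bytes)
    return need <= drive_read_gbps * headroom


if __name__ == "__main__":
    rate, size = 4_000, 150_000  # hypothetical: 4,000 images/s at ~150 KB each
    print(f"Required: {required_read_gbps(rate, size):.2f} GB/s")
    for name, speed in (("hypothetical SATA SSD", 0.5), ("hypothetical NVMe SSD", 3.5)):
        print(name, "keeps up" if storage_keeps_up(rate, size, speed) else "is a bottleneck")
```

Here the workload needs 0.6 GB/s, which exceeds 70% of the slower drive but fits comfortably within the faster one.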

Networking & I/O

AI workloads move data constantly and frequently span distributed systems. AI servers rely on:

- High-speed Ethernet or fibre connections.

- Dedicated GPU interconnects (like NVIDIA NVLink) for rapid GPU-to-GPU communication.

- High-bandwidth I/O paths to avoid slowdowns.

Efficient networking keeps training fast and inference responsive.
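A back-of-the-envelope estimate shows why link speed matters when datasets move between storage, servers, and the cloud: transfer time is the data size in bits divided by the effective link speed. The sketch assumes about 80% of the rated speed is usable after protocol overhead, and the 2 TB dataset is hypothetical.

```python
def transfer_hours(dataset_bytes: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours to move `dataset_bytes` over a link rated at `link_gbps` gigabits per second.

    `efficiency` (assumed 0.8) discounts protocol overhead and contention.
    """
    effective_bits_per_second = link_gbps * 1e9 * efficiency
    return dataset_bytes * 8 / effective_bits_per_second / 3600


if __name__ == "__main__":
    dataset = 2e12  # hypothetical 2 TB training set
    for gbps in (1, 10, 100):
        print(f"{gbps:>3} Gb/s link: {transfer_hours(dataset, gbps):.2f} h")
```

Under these assumptions, 2 TB takes roughly 5.6 hours over 1 Gb/s but under 4 minutes over 100 Gb/s.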

Deployment Environments & Form-Factors

Not every AI server lives in a massive data centre. Where and how you deploy one affects its performance, cost, and feasibility.

On-Premises vs Cloud vs Hybrid vs Edge Deployments

On-Premises AI Servers

- Hosting AI servers in your own environment gives you complete control over data, security, and customisation.

- Suitable for organizations that need low-latency local processing.

Cloud-Based AI Servers

- Cloud providers offer flexible, scalable AI compute.

- A good fit for teams experimenting with AI or scaling workloads up and down on the fly.

Hybrid Deployments

- Some organizations blend cloud and on-premises systems, utilizing the cloud for burst compute and local machines for regular inference.

- Hybrid setups balance cost, performance, and control.
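The burst pattern described above can be sketched as a simple router: requests run on local hardware until it reaches its concurrency limit, and the overflow goes to the cloud. `HybridRouter`, the capacity of 4, and the `"local"`/`"cloud"` labels are hypothetical; a production setup would also need health checks, retries, and real endpoints.

```python
from dataclasses import dataclass


@dataclass
class HybridRouter:
    """Send requests to local capacity first; overflow ("burst") to the cloud."""
    local_capacity: int       # max concurrent requests the on-prem server handles (assumed)
    in_flight_local: int = 0  # requests currently running locally

    def acquire(self) -> str:
        """Pick a backend for a new request, reserving a local slot if one is free."""
        if self.in_flight_local < self.local_capacity:
            self.in_flight_local += 1
            return "local"
        return "cloud"

    def release(self, backend: str) -> None:
        """Free a local slot when a local request finishes; cloud requests hold none."""
        if backend == "local":
            self.in_flight_local -= 1


if __name__ == "__main__":
    router = HybridRouter(local_capacity=4)
    print([router.acquire() for _ in range(6)])  # 4 local, then 2 burst to the cloud
    router.release("local")
    print(router.acquire())  # the freed local slot is reused
```

Keeping the local tier busy while the cloud absorbs peaks is what lets hybrid setups balance cost against capacity.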

Edge AI Servers

- Edge deployments are ideal for real-time applications as they are located near the data source.

- Common applications include robotics, industrial IoT, and on-site video processing.

Infrastructure Demands

AI servers require more than just rack space. Before deploying one, consider:

- Power: AI accelerators consume significantly more power than CPU-only servers.

- Cooling: temperature control is essential.

- Rack and physical space.

- Network upgrades to sustain high data throughput.
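Power and cooling translate into concrete numbers. The sketch estimates yearly electricity cost and the heat your cooling must remove. It assumes a hypothetical server drawing 6 kW at full load, an electricity price of USD 0.15 per kWh, and a PUE (power usage effectiveness: total facility power divided by IT power) of 1.5 to cover cooling overhead. The 1 W ≈ 3.412 BTU/h conversion is standard; every other figure is a placeholder.

```python
HOURS_PER_YEAR = 24 * 365
BTU_PER_HOUR_PER_WATT = 3.412  # standard conversion: 1 W of heat ≈ 3.412 BTU/h


def annual_energy_cost(it_load_kw: float, price_per_kwh: float,
                       pue: float = 1.5, utilization: float = 1.0) -> float:
    """Yearly electricity cost, including facility overhead via PUE (assumed 1.5)."""
    facility_kw = it_load_kw * utilization * pue
    return facility_kw * HOURS_PER_YEAR * price_per_kwh


def cooling_btu_per_hour(it_load_kw: float) -> float:
    """Heat the cooling system must remove; nearly all server power ends up as heat."""
    return it_load_kw * 1000 * BTU_PER_HOUR_PER_WATT


if __name__ == "__main__":
    load_kw = 6.0  # hypothetical multi-GPU server at full load
    print(f"Energy: USD {annual_energy_cost(load_kw, 0.15):,.0f} per year")
    print(f"Heat:   {cooling_btu_per_hour(load_kw):,.0f} BTU/h")
```

With these placeholders, the server costs about USD 11,800 a year in electricity and produces roughly 20,500 BTU/h of heat.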

Choosing Whether You Need One

An AI server offers a lot of power, but that doesn’t mean you always need one. Before deciding, weigh the costs of hardware and infrastructure changes against your workloads, objectives, and available budget.

Here is a practical way to decide.

Start With the Right Questions

First, consider your current or planned usage of AI.

What is your current workload?

- Training, especially of custom or large models, requires accelerators and high-bandwidth memory.

- Inference can often run efficiently on smaller local servers or cloud instances.

What workload volume are you expecting?

- For occasional tasks, training in the cloud is usually sufficient.

- For more frequent or heavier tasks, dedicated hardware is usually more cost-effective.

How important is latency for the task?

- Real-time tools such as vision systems, support bots, and robotics may need on-prem servers.

- For tasks that can tolerate some latency, such as batch processing, cloud resources can be more cost-effective.

Do you need full control over the data for this task?

- Data-privacy requirements lead some industries to prefer on-site or private AI servers.

Check Your Cost & Infrastructure Readiness

AI servers place heavier demands on infrastructure. Check the following:

- Do you have the power capacity to support GPU-heavy servers?

- Can your cooling and HVAC systems handle the extra heat?

- Do you have the physical rack space or a proper hosting environment?

- Is your budget prepared for hardware and maintenance costs?

- Finally, can your team manage the setup?

Comparing Your Options

| Option | Best For | Pros | Cons |
|---|---|---|---|
| Standard Shared Hosting | Websites & blogs | Affordable and easy to manage | Not suitable for AI workloads |
| VPS Hosting | Small tools | More control and custom setups | Limited for training |
| Dedicated AI Server | AI training/inference | Full control and predictable performance | Upfront cost, power, cooling, maintenance |
| Cloud AI | Flexible or short-term workloads | Elastic, no hardware investment | Higher cost for sustained heavy use |

If your workload varies a lot, cloud options (GPU instances, managed ML services) are the safer bet. However, if you consistently run heavy workloads, owning an AI server usually becomes the more economical investment over time.
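Whether ownership pays off comes down to a break-even calculation: the upfront hardware cost divided by the hourly saving compared with renting similar cloud capacity. All prices below are hypothetical placeholders (a USD 40,000 server, USD 1.50 per hour to operate it, and USD 6.00 per hour for a comparable cloud GPU instance). The model is deliberately simple: it ignores depreciation, resale value, and costs the owned server incurs while idle.

```python
import math


def break_even_hours(hardware_cost: float, owned_cost_per_hour: float,
                     cloud_cost_per_hour: float) -> float:
    """Usage hours after which owning beats renting; math.inf if it never does."""
    saving_per_hour = cloud_cost_per_hour - owned_cost_per_hour
    if saving_per_hour <= 0:
        return math.inf
    return hardware_cost / saving_per_hour


if __name__ == "__main__":
    hours = break_even_hours(40_000, owned_cost_per_hour=1.50, cloud_cost_per_hour=6.00)
    for label, hours_per_month in (("24/7 use", 730), ("business hours only", 160)):
        print(f"{label}: break-even after {hours / hours_per_month:.1f} months")
```

At round-the-clock use, the hypothetical server breaks even in about a year; at business-hours use, it takes more than four years. This is the arithmetic behind the advice that steady, heavy workloads favor owning hardware.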

Decision Checklist

You may need an AI server if several of these apply:

- You regularly train or fine-tune AI models.

- You maintain real-time AI services in which even a slight delay can change outcomes.

- Cloud inference costs are unpredictable or rising rapidly.

- You handle sensitive data and prefer to keep it on systems you control.

- You have (or can easily build) adequate power and cooling.

- You want predictable, long-term cost efficiency for sustained workloads.

- Your team has the skills to manage hardware, or you want full autonomy over the system.

Trade-Offs & Risks

AI servers are powerful, but their drawbacks are just as real, and every business, agency, or freelancer should weigh them. Keep these trade-offs in mind:

- AI-optimised hardware (GPUs, TPUs, high-bandwidth memory, etc.) is expensive, and ongoing operational costs such as cooling, electricity, and maintenance can exceed those of a normal server.

- If your AI tasks are light, infrequent, or seasonal, you may overpay for hardware that sits idle when cloud-based services would use resources more efficiently.

- The infrastructure is more complex, so you need more skilled staff and more configuration and optimisation work.

- AI hardware takes up significant space and requires robust cooling, particularly for large-scale training. Heat output and operational carbon footprint are growing concerns for companies seeking to reduce their environmental impact.

If your workload, resources, and long-term rollout plan justify it, an AI server can greatly improve operational output across the board. If not, a flexible cloud or hybrid solution may be a better starting point.

Future Trends & What’s Ahead

AI’s impact on core infrastructure reaches everyone from freelancers to the largest corporations. Over the coming years, how AI compute is accessed and deployed is likely to change significantly.

Here are several key changes on the horizon:

| Trend | Business Implication |
|---|---|
| Growth in edge AI servers | Efficient, compact AI compute puts advanced AI capabilities within reach of smaller teams. |
| Hybrid cloud and edge | Teams train in the cloud and run inference at the edge or in private locations. |
| Sustainability advancements | Lower heat output and better building and data-center engineering make AI workloads cheaper to operate and more sustainable. |
| “AI-ready” hosting and managed services | Hosts offering managed AI compute make it more feasible and economical to start using AI. |

AI servers will likely continue to adopt faster, cooler, and more distributed architectures, letting organizations deploy AI without massive upfront costs.

Quick Evaluation Checklist – Do you need an AI Server?

Use this straightforward checklist to assess whether an AI server makes sense for your company. It focuses on practical, everyday decision-making.

Use-case fit

- Do you need to train or fine-tune AI models in-house?

- Do you run high-volume inference (chat, vision, recommendation)?

- Do you require low-latency or on-premise processing for compliance or privacy?

Estimated scale

- What request volume do you expect per second or per minute?

- How large and complex are your models (e.g., vision models, LLMs)?

- What latency do you need: real-time, near-real-time, or batch?
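Request rate and latency together determine how much concurrency a server must sustain. Little's law states that the average number of requests in flight equals the arrival rate multiplied by the average time each request spends in the system. The sketch turns that into a rough replica count; the inputs (50 requests per second, 0.4 s average latency, 8 concurrent requests per replica) and the 30% headroom factor are hypothetical.

```python
import math


def concurrent_requests(requests_per_second: float, avg_latency_s: float) -> float:
    """Little's law: average requests in flight = arrival rate x time in system."""
    return requests_per_second * avg_latency_s


def replicas_needed(requests_per_second: float, avg_latency_s: float,
                    per_replica_concurrency: int, headroom: float = 1.3) -> int:
    """Replicas needed to serve the load with spare headroom (assumed 30%)."""
    in_flight = concurrent_requests(requests_per_second, avg_latency_s) * headroom
    return math.ceil(in_flight / per_replica_concurrency)


if __name__ == "__main__":
    print(replicas_needed(50, 0.4, per_replica_concurrency=8))
```

Here 50 × 0.4 = 20 requests are in flight on average; with headroom that becomes 26, so four replicas are needed.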

Infrastructure Readiness

- How much power, cooling, and rack space do you have available?

- Do you have staff to manage setup, maintenance, and monitoring?

- Can your environment host GPUs/accelerators?

Cost & Budget Fit

- What are the upfront hardware costs and the ongoing operating costs (OPEX), such as power, cooling, and repairs?

- What will software cost (e.g., monitoring tools, licensing, support)?

- How does this compare with cloud costs at your expected usage, and does ownership pay off?

Future-Proofing

- Does the environment support easy scaling (more accelerators/GPUs, faster interconnects)?

- Can you move to a hybrid or edge setup later?

- Do you need a modular, upgradeable design?

This checklist helps you judge whether an AI server is a good match for your workload, budget, and long-term plans.

FAQ

Can I use a standard server for AI workloads?

Yes, for minor or light tasks, but performance may be limited. Standard servers lack many of the optimisations (accelerators, memory, I/O) that AI workloads require.

Which organisations should consider an AI server?

Organisations that conduct sustained model training, handle large-scale inference (many users or low-latency needs), or deploy AI at the edge with specific demands. Smaller web-hosting or simple website workloads typically don’t need a dedicated AI server.

What’s the difference between cloud AI compute and owning an AI server?

Cloud AI compute offers flexibility (pay-as-you-go), no infrastructure burden, and rapid access. Owning an AI server gives you more control and potential long-term cost savings for heavy workloads, but it requires infrastructure, upfront investment, and operational overhead.

How significant is the power or infrastructure cost of an AI server?

Quite significant. AI server deployments can draw much more power and require more cooling and space than standard servers. Given the growth in AI-optimised data-centre infrastructure, energy and cooling are major cost factors.

Will AI servers become obsolete quickly, given rapid hardware advances?

Hardware evolves rapidly (new accelerators, form factors, edge deployments), so upgrade-path planning is important. However, the fundamental role of AI servers remains: supporting training/inference. The risk is more about being locked into outdated hardware.