Docker is a powerful tool that simplifies software development and deployment. It allows you to run applications in isolated containers, ensuring they work the same way on any system. Containers share the host machine’s resources, making them lightweight and efficient compared to traditional virtual machines.

However, if not properly managed, Docker containers can consume excessive memory and CPU, affecting the performance of other applications on the host system. For example, a single resource-hungry container might slow down critical services or even crash the host.

To prevent such issues, you can limit how much memory and CPU a container uses. This ensures better resource allocation, avoids system overloads, and keeps your applications running smoothly. In this guide, we’ll explore simple ways to control Docker’s resource usage effectively.

Managing Docker memory usage is crucial for maintaining system performance and ensuring resource availability. Beyond basic settings, Docker provides multiple ways to enforce memory restrictions on Linux systems, catering to various use cases.

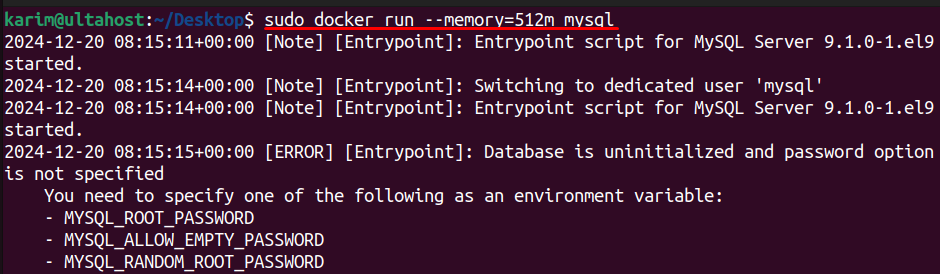

The --memory flag (short form -m) is the simplest and most widely used way to limit container memory. It sets a hard cap, so the container cannot use more than the amount you specify.

sudo docker run --memory=512m <image_name>

This command limits the container's memory to 512 MB. If processes in the container try to use more than that, the kernel's out-of-memory (OOM) killer steps in and typically terminates a process inside the container. A hard limit is the most direct way to stop one container from exhausting the host's memory.
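To confirm that a limit took effect, you can compare the size you requested with the value Docker stores for the container, which docker inspect reports in bytes. The helper below is a minimal sketch of that conversion. The function name to_bytes is hypothetical, and it assumes only whole numbers with the single-letter suffixes b, k, m, and g.

```shell
#!/bin/sh
# Convert a Docker-style size such as 512m or 1g to bytes.
# Assumption: whole numbers with an optional one-letter suffix (b, k, m, g).
to_bytes() {
    value=${1%[bBkKmMgG]}   # numeric part, with one trailing unit letter removed
    unit=${1#"$value"}      # the unit letter, or empty if none was given
    case "$value" in
        ''|*[!0-9]*) echo "unsupported size: $1" >&2; return 1 ;;
    esac
    case "$unit" in
        k|K) value=$((value * 1024)) ;;
        m|M) value=$((value * 1024 * 1024)) ;;
        g|G) value=$((value * 1024 * 1024 * 1024)) ;;
    esac
    echo "$value"
}

# Usage on a host with Docker (<container_id> is a placeholder):
#   actual=$(sudo docker inspect --format '{{.HostConfig.Memory}}' <container_id>)
#   [ "$actual" -eq "$(to_bytes 512m)" ] && echo "limit applied"
```

A stored value of 0 means that no memory limit is set on the container.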

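The --memory-swap flag sets the combined total of memory and swap that a container may use, so it is used together with --memory. Setting --memory-swap equal to --memory disables swap for the container. Setting it higher allows swap up to the difference between the two values: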

sudo docker run --memory=512m --memory-swap=1g <image_name>

Because --memory-swap is a total, this configuration allows 512 MB of swap on top of the 512 MB of memory (1 GB combined), which gives containers with bursty workloads some headroom.
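The arithmetic behind --memory-swap is easy to get wrong, so here is a small sketch that works it out. The function name swap_allowance_mb is hypothetical, and both arguments are assumed to be whole megabytes for simplicity. The special cases follow Docker's documented behavior: -1 removes the swap cap, and 0 is treated as unset, in which case the container may use as much swap as its --memory value.

```shell
#!/bin/sh
# Print how much swap (in MB) a container may use, given the values passed
# to --memory and --memory-swap. Both arguments are whole megabytes.
swap_allowance_mb() {
    memory_mb=$1
    memory_swap_mb=$2
    if [ "$memory_swap_mb" -eq -1 ]; then
        echo "unlimited"                  # -1 removes the swap cap
    elif [ "$memory_swap_mb" -eq 0 ]; then
        echo "$memory_mb"                 # 0 is treated as unset: swap equals memory
    elif [ "$memory_swap_mb" -lt "$memory_mb" ]; then
        echo "--memory-swap must not be lower than --memory" >&2
        return 1
    else
        echo $((memory_swap_mb - memory_mb))
    fi
}

# Examples:
#   swap_allowance_mb 512 1024   prints 512
#   swap_allowance_mb 512 512    prints 0 (swap disabled)
```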

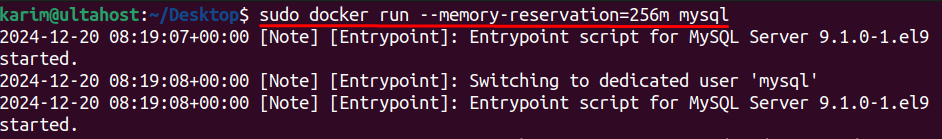

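The --memory-reservation flag sets a soft memory limit. The container may exceed it while the host has memory to spare, but when Docker detects contention or low memory on the host, it tries to bring the container back toward the reserved amount. When you combine it with --memory, set the reservation lower than the hard limit.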

sudo docker run --memory-reservation=256m <image_name>

This setting is ideal for managing containers in environments with dynamic resource demands.
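A soft limit is often paired with a hard limit, so the container has a target under memory pressure and a ceiling it can never cross. The sketch below builds such a command and rejects combinations where the reservation is not below the hard limit. The function name reserved_run_cmd and the image name my_image are placeholders, and sizes are assumed to be whole megabytes.

```shell
#!/bin/sh
# Build a `docker run` command that pairs a soft limit with a hard limit.
# Arguments: reservation in MB, hard limit in MB, image name.
reserved_run_cmd() {
    reservation_mb=$1
    limit_mb=$2
    image=$3
    # Design choice of this sketch: require the soft limit to be strictly
    # lower than the hard limit so that the reservation can take effect.
    if [ "$reservation_mb" -ge "$limit_mb" ]; then
        echo "reservation must be lower than the hard limit" >&2
        return 1
    fi
    echo "docker run --memory-reservation=${reservation_mb}m --memory=${limit_mb}m $image"
}

# Example:
#   reserved_run_cmd 256 512 my_image
#   prints: docker run --memory-reservation=256m --memory=512m my_image
```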

If a container is already running, you can adjust its memory settings without restarting it using the docker update command. For example:

sudo docker update --memory=1g <container_id>

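This lets you respond to changing application requirements without downtime. If the container was started with --memory-swap and the new memory limit would exceed that value, raise --memory-swap in the same command. Otherwise Docker rejects the update.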
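To decide which containers need a new limit, you can check how close each one is to its current cap. The sketch below filters output in the form that docker stats can produce with a custom format. The function name containers_over is hypothetical, and the input is assumed to be one container per line as a name followed by a percentage.

```shell
#!/bin/sh
# Read lines of "<name> <mem%>" (for example "web 85.30%") on stdin and print
# the names whose memory usage is at or above the given percentage.
containers_over() {
    awk -v limit="$1" '{
        pct = $2
        sub(/%$/, "", pct)              # strip the trailing percent sign
        if (pct + 0 >= limit + 0) print $1
    }'
}

# Usage on a host with Docker:
#   sudo docker stats --no-stream --format '{{.Name}} {{.MemPerc}}' | containers_over 80
```

The names it prints are candidates for docker update with a higher --memory value, or for a closer look at why they use so much memory.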

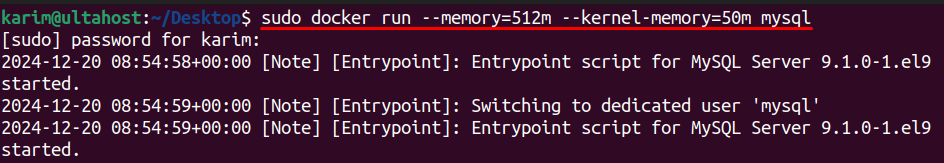

For advanced memory management, you can cap kernel memory with the --kernel-memory flag, which enables kernel memory accounting for the container. Note that Docker deprecated this flag in version 20.10, so recent releases may ignore or reject it. For example, to limit kernel memory to 50 MB:

sudo docker run --memory=512m --kernel-memory=50m <image_name>

Kernel memory limits are mainly relevant for containers that perform extensive system-level operations.

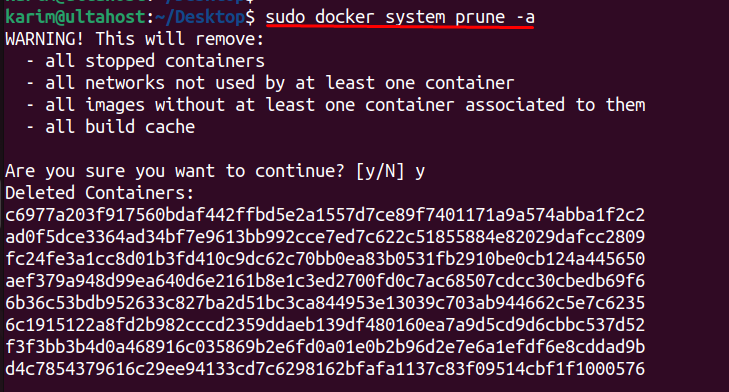

Stopped containers, unused images, and unused networks do not hold memory, but they take up disk space and clutter the host, which can indirectly affect performance. Use docker system prune regularly to clean them up:

sudo docker system prune -a

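The -a flag also removes every image that is not used by at least one container, not only dangling ones, so check what will be deleted before you confirm. Regular cleanup keeps host resources available for active containers.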
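When a full prune is more than you need, Docker also has narrower prune commands for each resource type. The sketch below maps a cleanup scope to the matching command. The function name prune_cmd and the scope names are hypothetical, and -f is included to skip the confirmation prompt, so leave it out if you want to be asked first.

```shell
#!/bin/sh
# Map a cleanup scope to the narrowest matching prune command.
prune_cmd() {
    case "$1" in
        containers) echo "docker container prune -f" ;;   # stopped containers only
        images)     echo "docker image prune -f" ;;       # dangling images only
        networks)   echo "docker network prune -f" ;;     # unused networks only
        all)        echo "docker system prune -a -f" ;;   # everything unused
        *) echo "unknown scope: $1" >&2; return 1 ;;
    esac
}

# Usage on a host with Docker:
#   sudo docker system df            # review disk usage first
#   sudo $(prune_cmd containers)     # then remove only stopped containers
```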

Setting memory and CPU limits ensures no single container can monopolize system resources. This avoids host slowdowns or crashes caused by excessive resource consumption.

By capping resource usage, you can maintain reliable performance for critical applications. This is especially important when running multiple containers simultaneously, preventing interference and ensuring smooth operations.

Proper resource allocation helps avoid over-provisioning, particularly in cloud environments where resources are billed based on usage. Efficient resource management minimizes unnecessary expenses on additional infrastructure.

Setting limits ensures that all containers receive an equitable share of available resources, preventing situations where some containers suffer from resource starvation while others overuse the host’s CPU and memory.

With defined resource limits, it becomes easier to track usage patterns, detect bottlenecks, and resolve issues. This simplifies performance tuning and helps in diagnosing problems effectively.

Enforcing resource limits is a standard practice in container orchestration. It enhances predictability, reduces the risk of unexpected failures, and supports a structured approach to system design and operation.

With clear resource boundaries, planning for scaling applications becomes more efficient. Resource caps help predict the behavior of containers under increased loads, enabling better scaling strategies.

Limiting resources prevents any single container from executing resource-intensive tasks that could impact the host’s overall security and performance. This isolation ensures a more secure and controlled environment.

For organizations with compliance or operational policies around resource usage, setting limits ensures adherence to defined standards and prevents unregulated consumption.

In multi-tenant setups, resource limits ensure fair usage among users or teams sharing the same infrastructure. This prevents conflicts and maintains harmony in resource allocation.

Docker allows you to deploy applications quickly by packaging them with all necessary dependencies. This ensures faster time-to-market and simplified development cycles.

Docker containers are platform-agnostic, meaning they can run on any system with Docker installed. This ensures a seamless transition between different environments, such as development, testing, and production.

Unlike traditional virtual machines, Docker containers share the host OS kernel. This reduces resource overhead and allows for running multiple containers on the same host efficiently.

Docker provides built-in networking capabilities, enabling easy communication between containers. It supports bridge networks, host networks, and custom network configurations for greater flexibility.

Docker has a rich ecosystem of tools, plugins, and pre-built container images available through Docker Hub. This accelerates development by allowing teams to leverage existing solutions.

Each container operates in its own isolated environment. This protects applications from interfering with each other and minimizes security risks associated with shared resources.

Docker is a versatile and efficient tool for containerized application deployment, offering seamless cross-platform compatibility and lightweight resource usage. However, managing container memory and CPU usage is essential to prevent resource contention, system slowdowns, and application failures. By implementing features like the --memory and --memory-swap flags, you can cap resource consumption, ensuring fair distribution and stable performance across all containers. Additional practices, such as updating limits dynamically and enabling kernel memory accounting, provide flexibility and control over resource allocation.

Enforcing these best practices not only enhances application reliability but also optimizes cost efficiency and supports scalability. Whether you are running containers in single-node setups or multi-tenant environments, resource management aligns with industry standards, improving security, compliance, and overall system performance. By keeping strict control over Docker memory usage, you can ensure sustainable and predictable operations for both small-scale and enterprise-level applications.

Limiting resources prevents a single container from overloading the host system, ensuring stable performance and fair resource distribution across all containers.

The --memory flag sets a hard memory cap for a container, preventing it from exceeding the defined limit and triggering an out-of-memory action if necessary.

Yes, you can use the docker update command to change the memory settings of an active container without restarting it.

The --memory-swap flag sets the combined limit for physical memory and swap, offering flexibility for containers with bursty workloads.

The --memory flag sets a hard limit, while --memory-reservation specifies a soft limit that allows temporary resource overuse when extra memory is available.

Use the docker system prune -a command to remove unused containers, images, and networks, freeing up disk space and other host resources.

Yes, resource limits prevent a single container from monopolizing resources, reducing risks of host instability and enhancing isolation between containers.